EmbeddingGemma Changes Everything

Search has long abandoned keywords for vector-based understanding, and this is something I’ve been teaching clients for YEARS.

Google’s release of EmbeddingGemma reinforces that search has shifted to vector-based understanding. EmbeddingGemma is a compact, open embedding model built on Gemma 3, which Google develops from the same research as Gemini (its flagship family of AI models), so it is loosely a “miniaturized Gemini.” That gives us a rare look at how Google’s models represent content: as 768-dimensional vectors, not keywords.

Mixed Intent Problem

Google uses specific attention patterns and “neural circuits” to evaluate:

- Quality-assessment neurons, which evaluate content quality

- Entity-tracking heads, which follow concepts through text

- Domain-expertise patterns, which recognize authoritative content

When content mixes semantic intents, it confuses these neural pathways: the vectors scatter, attention patterns fragment, and the quality-assessment circuits can’t properly evaluate the content.

This is why such content tends to stall around 6th position or lower or, worse, gets ignored by large language models.

Generic Intent Models

Imagine trying to explain heart surgery to someone using only everyday vocabulary. You’d lose critical nuance, precision, and meaning. That’s exactly what happens when generic AI models try to understand specialized content.

Generic AI models (like standard ChatGPT or Google’s base models) are trained on everything from recipes to rocket science. They’re jacks-of-all-trades but masters of none. When your food blog mentions “conversion,” a generic model can’t tell whether you mean ingredient conversion or:

- Currency conversion

- Sales conversion

- File format conversion

- Religious conversion

This confusion creates vector dispersion. Your content’s meaning scatters across semantic space because the AI can’t grasp your specific context.

When precision is lost, your rankings erode over time.
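“Vector dispersion” isn’t a metric Google publishes, so here is one simple way to quantify it, offered as an assumption: embed each section of a page, then average each section’s cosine distance to the page’s mean vector. The hand-made 3-dimensional vectors below are hypothetical stand-ins for real section embeddings (from EmbeddingGemma or any other model), and the section farthest from the centroid is a natural first candidate for a dispersion point.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def section_distances(vectors):
    """Cosine distance (1 - similarity) from each section vector to the page centroid."""
    center = centroid(vectors)
    return [1 - cosine(v, center) for v in vectors]


def dispersion(vectors):
    """Average section distance: near 0 = focused page, larger = scattered meaning."""
    distances = section_distances(vectors)
    return sum(distances) / len(distances)


# Hypothetical 3-d vectors standing in for real 768-d section embeddings.
focused_page = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.85, 0.15, 0.05]]
mixed_page = [[0.9, 0.1, 0.0], [0.85, 0.15, 0.05], [0.05, 0.1, 0.95]]

print(f"focused page dispersion: {dispersion(focused_page):.3f}")
print(f"mixed page dispersion:   {dispersion(mixed_page):.3f}")

# The section farthest from the centroid is the likeliest dispersion point.
distances = section_distances(mixed_page)
worst = distances.index(max(distances))
print("most off-topic section in mixed page:", worst)
```

A low score alone doesn’t prove a page will rank; it only says the sections point in a similar direction in whatever embedding space you measured.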

Custom Intent Models

Building custom Gemma embeddings introduces a significant opportunity.

Custom Intent Models are AI systems explicitly trained on your industry’s language, concepts, and relationships. Think of them as hiring an expert Spanish translator who’s spent years in your field versus using Google Translate.

This past week, I helped a client train a model on 9 million keywords, including query expansions, plus 800 posts, to create a comprehensive vector map for their content, internal links, and link-building strategy.
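Training a custom model doesn’t have to mean retraining all of its weights. LoRA (Low-Rank Adaptation), which this article recommends among its adjustments, freezes a pretrained weight matrix W of shape d × k and learns only a low-rank update B·A, where B is d × r and A is r × k. The arithmetic below compares trainable parameters for a single matrix; the 768 × 768 shape (chosen to match EmbeddingGemma’s 768-dimensional output) and rank r = 8 are illustrative assumptions, not a description of the client project above.

```python
def full_params(d, k):
    """Trainable parameters when fine-tuning a d x k weight matrix directly."""
    return d * k


def lora_params(d, k, r):
    """Trainable parameters in a LoRA update B @ A, with B: d x r and A: r x k."""
    return d * r + r * k


d = k = 768  # assumed square projection, matching the 768-d embedding width
r = 8        # assumed LoRA rank

full = full_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  LoRA: {lora:,}  ({lora / full:.2%} of full)")
# full: 589,824  LoRA: 12,288  (2.08% of full)
```

The saving scales with every adapted matrix in the model, which is why LoRA is a common choice when domain data is plentiful but compute is not.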

Real-World Example

Generic Model sees “patient journey”:

- Maybe medical treatment?

- Could be customer experience?

- Possibly a travel blog?

- Confusion = poor performance

Healthcare Custom Model sees “patient journey”:

- Immediately understands: diagnosis → treatment → recovery

- Knows related concepts: outcomes, compliance, care pathways

- Recognizes intent: clinical information, not sales

- Clarity = excellent performance

E-commerce Custom Model sees “customer journey”:

- Understands: awareness → consideration → purchase → retention

- Knows related concepts: cart abandonment, LTV, conversion funnel

- Recognizes intent: sales optimization, not medical advice

- Precision = higher conversions

Think of training a custom model like training a specialized employee.

Query Fan-Out

The query fan-out approach of generating “hundreds of semantically related query variations from a single seed” directly addresses the intent problem.

How it works:

- Input: Core content intent

- Output: All query variations that share the same semantic intent

- Use case: Ensure content maintains coherence across all related queries

- Inverse use: Identify queries that would dilute intent if targeted
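The filtering half of this (deciding which variations share the seed’s intent and which would dilute it) can be sketched as a cosine-similarity cut-off against the seed’s embedding. Generating the variations themselves is out of scope here; the candidate queries, their 3-dimensional vectors, and the 0.8 threshold are all hypothetical placeholders you would replace with real embeddings and a tuned cut-off.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def split_fan_out(seed_vec, candidates, threshold=0.8):
    """Partition candidate queries into those aligned with the seed intent and
    those that would dilute it. `threshold` is an arbitrary cut-off to tune."""
    aligned, diluting = [], []
    for query, vec in candidates.items():
        score = cosine(seed_vec, vec)
        (aligned if score >= threshold else diluting).append((query, round(score, 3)))
    # Most similar first in each group.
    aligned.sort(key=lambda item: item[1], reverse=True)
    diluting.sort(key=lambda item: item[1], reverse=True)
    return aligned, diluting


seed = [0.9, 0.1, 0.0]  # hypothetical embedding of "baking unit conversion"
candidates = {
    "cups to grams flour": [0.85, 0.15, 0.05],
    "oven temperature conversion chart": [0.8, 0.2, 0.1],
    "ecommerce conversion rate": [0.1, 0.9, 0.1],
    "convert pdf to word": [0.05, 0.1, 0.95],
}

aligned, diluting = split_fan_out(seed, candidates)
print("target:", aligned)
print("avoid: ", diluting)
```

The "avoid" list is the inverse use described above: queries that share a keyword ("conversion") but pull the page toward a different intent.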

Mechanistic Interpretability

The real game-changer is understanding which neural pathways your content activates. I’ve developed methods to:

- Visualize the exact circuits your content triggers

- Identify where different sections activate conflicting patterns

- Pinpoint when mixed intent breaks the neural flow

- Optimize for the specific patterns that drive rankings

It’s always been a matter of data and patterns, specifically unique patterns, which is why so many owners lost traffic over the last four years.

90% of owners rely on generic data and patterns, which leads to long-term losses. James copies William, trying to replicate his success or, worse, thousands of owners copy the same agency or company, trying to replicate its success, and they consistently fail.

It’s about data and patterns unique to you. Create your own semantic signature and watch what happens.

Immediate Adjustments

- Audit for Vector Coherence
  - Test your content using EmbeddingGemma (Google’s open embedding model)
  - Compare vector clustering patterns
  - Identify dispersion points
- Enhance Industry-Specific Understanding
  - Train models on your specific domain language
  - Use LoRA adapters for efficient fine-tuning
  - Use Matryoshka embeddings (truncatable vectors) for scalable analysis
- Map Your Intent Network
  - Create query expansion maps from core intents
  - Find all semantically aligned variations
  - Eliminate intent-diluting targets
- Cross-Validate Everything
  - Test content across multiple models
  - Ensure consistent vector coherence
  - Validate against actual ranking patterns
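On the Matryoshka point: EmbeddingGemma produces 768-dimensional vectors and was trained with Matryoshka Representation Learning, so the leading components can be kept (512, 256, or 128 dimensions) and re-normalized to get smaller, cheaper vectors for large-scale comparisons. The sketch shows only that truncate-and-renormalize step, on made-up 8-dimensional vectors; the arithmetic is identical at 768 dimensions, but the guarantee that truncated vectors stay meaningful comes from the MRL training, not from the slicing itself.

```python
import math


def truncate_and_normalize(vec, dim):
    """Keep the first `dim` components of a Matryoshka-trained embedding and
    rescale to unit length so cosine similarity equals the dot product."""
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]


def dot(a, b):
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical 8-d vectors standing in for full 768-d page embeddings.
page_a = [0.5, 0.4, 0.3, 0.2, 0.1, 0.05, 0.02, 0.01]
page_b = [0.45, 0.42, 0.28, 0.25, -0.1, 0.0, 0.03, 0.0]

for dim in (8, 4, 2):
    a = truncate_and_normalize(page_a, dim)
    b = truncate_and_normalize(page_b, dim)
    print(f"{dim} dims: similarity {dot(a, b):.3f}")
```

On real embeddings you would slice to 256 or 128 and check that similarity rankings stay stable before relying on the smaller size.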

The sites winning today aren’t gaming algorithms. They’re creating content with perfect semantic coherence that aligns with how Google’s neural circuits, and popular large language models such as ChatGPT and Claude, actually process information.

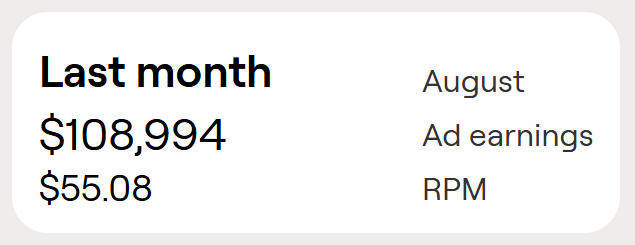

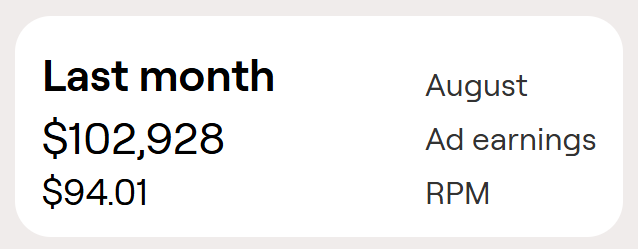

I’ll admit, we’re way ahead, and the owners I’ve worked with over the last five years are consistently setting new traffic and revenue records.

One of the clients above went like this:

| Year | Organic Traffic |
|------|-----------------|
| 2022 | 148k |
| 2023 | 295k |
| 2024 | 856k |
| 2025 | 1.48m |
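Reading the table’s figures as stated (1.48m taken as 1,480k), the year-over-year growth works out as follows.

```python
def yoy_growth(series):
    """Percentage change between consecutive years in a {year: value} mapping."""
    years = sorted(series)
    return {
        (prev, curr): (series[curr] - series[prev]) / series[prev] * 100
        for prev, curr in zip(years, years[1:])
    }


# Organic traffic from the table, in thousands of visits (1.48m -> 1480).
traffic = {2022: 148, 2023: 295, 2024: 856, 2025: 1480}

for (prev, curr), pct in yoy_growth(traffic).items():
    print(f"{prev} -> {curr}: {pct:+.1f}%")
print(f"2022 -> 2025 multiple: {traffic[2025] / traffic[2022]:.1f}x")
# 2022 -> 2023: +99.3%
# 2023 -> 2024: +190.2%
# 2024 -> 2025: +72.9%
# 2022 -> 2025 multiple: 10.0x
```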

Moving forward, the gap between those who understand vector-based optimization and those still chasing keywords will only widen. The invisible has become visible, the complex has become actionable.